Most AI measurement programs focus on technical performance and skip basic safety and fairness evaluations. Even though 75% of AI initiatives fail, organizations keep reporting success based on narrow performance metrics alone, which is like tracking uptime while ignoring response quality.

AI systems require evaluation across the seven trustworthiness characteristics defined in the NIST AI RMF, which traditional performance metrics largely miss: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed. Organizations that evaluate trustworthiness before deployment are better placed to catch the drift and inconsistencies that destroy user trust.

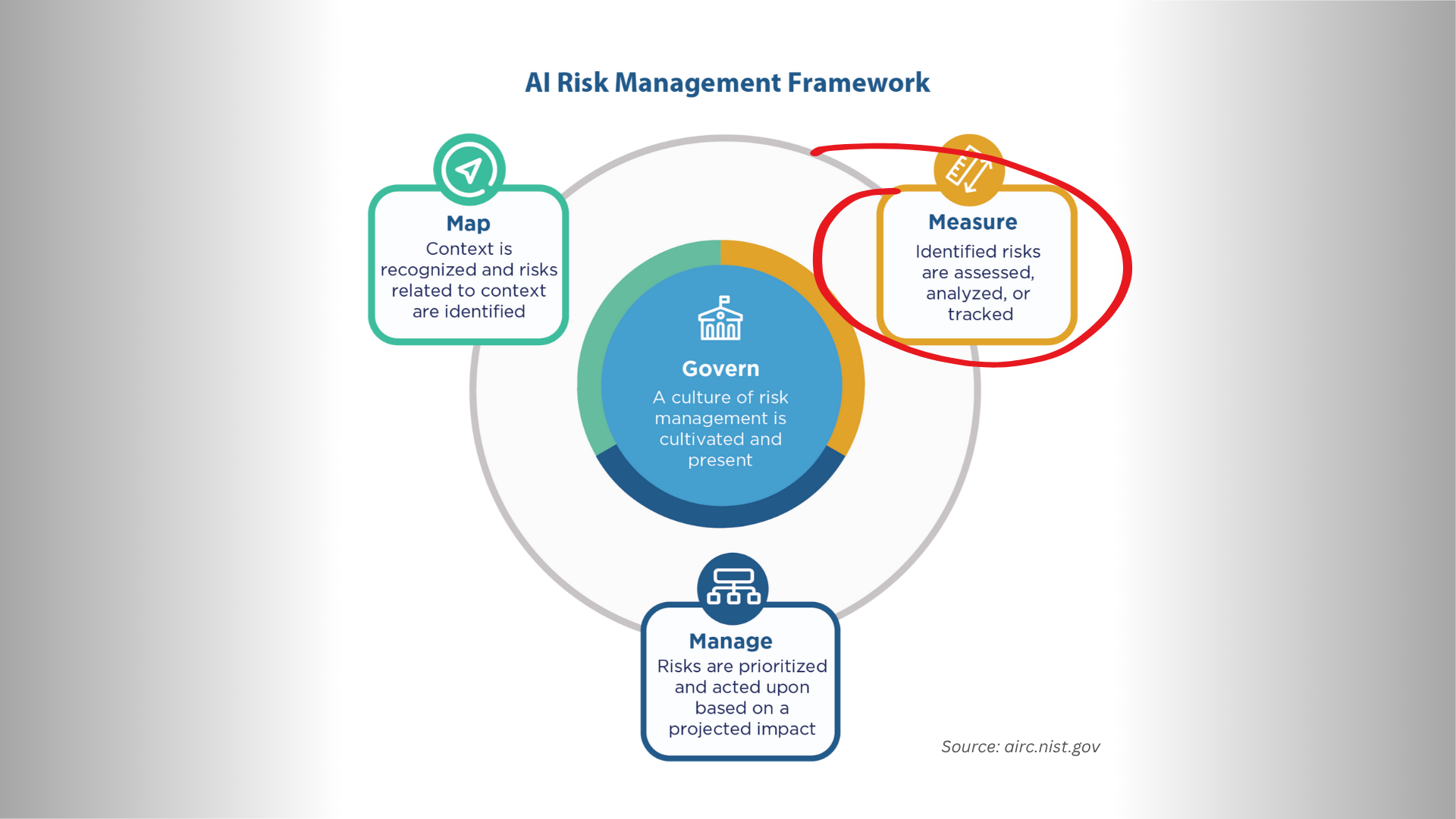
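To make the idea of a pre-deployment trustworthiness check concrete, here is a minimal sketch of a scorecard that tracks all seven characteristics and flags any that are unmeasured or weak. The `TrustScorecard` class, the 0-to-1 scoring scale, and the `0.8` threshold are all assumptions made for illustration; the NIST AI RMF does not prescribe a scoring scheme or a cutoff.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The seven trustworthiness characteristics named in the NIST AI RMF.
CHARACTERISTICS = (
    "valid_and_reliable",
    "safe",
    "secure_and_resilient",
    "accountable_and_transparent",
    "explainable_and_interpretable",
    "privacy_enhanced",
    "fair_with_harmful_bias_managed",
)


@dataclass
class TrustScorecard:
    """Hypothetical scorecard: one score in [0, 1] per characteristic."""

    scores: dict[str, float] = field(default_factory=dict)
    threshold: float = 0.8  # illustrative cutoff, not a NIST value

    def record(self, characteristic: str, score: float) -> None:
        """Store a score, rejecting unknown names and out-of-range values."""
        if characteristic not in CHARACTERISTICS:
            raise ValueError(f"unknown characteristic: {characteristic}")
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be between 0 and 1")
        self.scores[characteristic] = score

    def gaps(self) -> list[str]:
        """Return characteristics that are unmeasured or below the threshold."""
        return [
            c
            for c in CHARACTERISTICS
            if c not in self.scores or self.scores[c] < self.threshold
        ]

    def ready_to_deploy(self) -> bool:
        """True only when every characteristic is measured and passes."""
        return not self.gaps()


# Usage: strong technical scores alone do not clear the gate.
card = TrustScorecard()
card.record("valid_and_reliable", 0.95)
card.record("safe", 0.90)
print(card.ready_to_deploy())  # False: five characteristics are unmeasured
print(card.gaps())
```

The design choice worth noting is that an unmeasured characteristic counts as a gap rather than a pass, so a system with excellent accuracy numbers and no fairness or privacy evaluation still fails the check.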

The NIST AI RMF MEASURE function transforms measurement from performance theater into systematic trustworthiness evaluation. Instead of beautiful dashboards showing perfect technical metrics, organizations build frameworks that assess whether AI systems actually work safely in production.

This is Part 3 of the four-part NIST AI RMF implementation series: the part where you stop relying on convenience metrics and start measuring trust (and trustworthiness!) before your AI systems fail in ways that matter.